The area of land affected by extreme heatwaves is expected to double by 2020 and quadruple by 2040, and there’s no way we can stop it happening according to a new paper by Dim Coumou and Alexander Robinson – Historic and future increase in the global land area affected by monthly heat extremes (Environmental Research Letters, open access). However, the researchers find that action to cut emissions can prevent further dramatic increases in heat extremes out to the end of the century.

The paper’s made headlines around the world — see The Guardian, Independent, and Climate Central — most focussing on the inevitability of more, and more intense, heat events in the near future. Dana Nuccitelli at The Guardian provides an excellent discussion of the science behind the new paper so, to avoid reinventing the wheel, I’m going to focus on a fascinating chart from the paper, and then ponder the implications for climate policy.

Coumou and Robinson define heat events in terms of their departure from the statistical distribution of all temperatures for any given part of the earth's surface. If, like me, it's a long time since that stats course at uni, you might need a reminder. For quantities that are "normally distributed" (like temperatures at any given place), the frequency of different values is described by a bell curve. Here's Wikipedia's explanation, and graph:

The standard deviation of a normal distribution, written with the Greek letter sigma, measures the variation, or spread, of values around the average. It therefore tells us how often values far from the average are likely to occur. For a normal distribution, about 68% of values fall within one standard deviation of the mean, 95% within 2-sigma, and 99.7% within 3-sigma. For temperatures, high-temperature events fall under the right-hand end of the curve, and cold events under the left.

Coumou and Robinson first worked out how often 3-sigma heat events occurred during a period when the climate was relatively stable (1950 to 1980). They then worked out how that has changed since. They found that the frequency of 3-sigma events, and the area of the earth's surface they cover, increased significantly over the last 30 years ((Confirming Jim Hansen's findings from last year, although using different statistical techniques (see RealClimate for more discussion).)). Finally, they used an ensemble of models to work out how these events are likely to change in the future under two emissions scenarios: one low, one "business as usual".
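The 68/95/99.7 figures are a property of the normal distribution, not a separate definition, so they can be checked directly. Below is a minimal Python sketch that uses only the standard library's `math.erf`. It computes the share of a normal distribution lying within k standard deviations of the mean. The function name `fraction_within` is purely illustrative.

```python
import math

def fraction_within(k: float) -> float:
    """Probability that a normally distributed value lies within k standard
    deviations of the mean: P(|Z| < k) = erf(k / sqrt(2))."""
    return math.erf(k / math.sqrt(2))

for k in (1, 2, 3):
    print(f"within {k}-sigma: {fraction_within(k):.1%}")
```

Run as-is, this prints roughly 68%, 95% and 99.7% for one, two and three standard deviations.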

The effect of warming is to move the whole bell curve towards the right, so that very rare events — greater than 3-sigma — become much more common. Here’s what the paper says:

The projections show that in the near-term such heat extremes become much more common, irrespective of the emission scenario. By 2020, the global land area experiencing temperatures of 3-sigma or more will have doubled (covering ~10%) and by 2040 quadrupled (covering ~20%). Over the same period, more-extreme events will emerge: 5-sigma events, which are now essentially absent, will cover a small but significant fraction (~3%) of the global land surface by 2040. These near-term projections are practically independent of emission scenario. [my emphasis]
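The next sketch shows why shifting the bell curve to the right makes rare events so much more common. It is deliberately idealized and rests on two assumptions:

* local temperatures stay normally distributed with an unchanged spread;
* warming simply moves the mean by some number of baseline standard deviations.

This is an illustrative simplification, not Coumou and Robinson's method. The shift values are arbitrary examples rather than numbers from the paper, and the function name `exceedance` is hypothetical.

```python
import math

def exceedance(threshold_sigma: float, shift_sigma: float) -> float:
    """Probability of exceeding `threshold_sigma` baseline standard deviations
    above the baseline mean, after the whole distribution has been shifted
    right by `shift_sigma` (spread assumed unchanged)."""
    z = threshold_sigma - shift_sigma  # distance of the threshold from the new mean
    return 0.5 * math.erfc(z / math.sqrt(2))

for shift in (0.0, 1.0, 2.0, 3.0):
    p3 = exceedance(3, shift)
    p5 = exceedance(5, shift)
    print(f"shift {shift:.0f} sigma: P(>3 sigma) = {p3:.4f}, P(>5 sigma) = {p5:.2e}")
```

With no shift, a 5-sigma value has a probability of a few in ten million, which is why such events are effectively absent in the baseline. A shift of two baseline standard deviations turns the old 3-sigma threshold into roughly a one-in-six event, and makes 5-sigma values thousands of times more likely than before.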

5-sigma events are very rare — “essentially absent” — in an unchanging climate, but as heat accumulating in the system stacks the dice (pace Hansen), they begin to happen – infrequently at first, but then more and more often as warming progresses. In a high emissions scenario:

By 2100, 3-sigma heat covers about 85% and 5-sigma heat about 60% of the global land area. The occurrence-probability of months warmer than 5-sigma reaches up to 100% in some tropical regions. Over extended areas in the extra-tropics (Mediterranean, Middle East, parts of western Europe, central Asia and the US) most (>70%) summer months will be beyond 3-sigma, and 5-sigma events will be common.

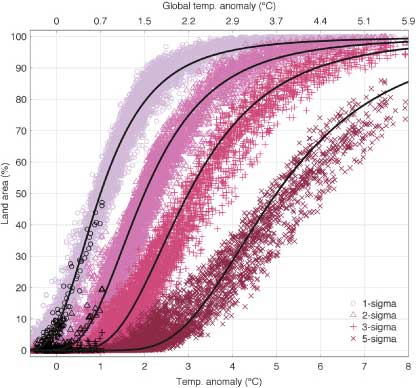

Figure 4 from the paper summarises the findings very nicely:

Land area is plotted on the vertical axis, and mean summer temperature across the bottom. Global temperature anomaly corresponding to those summer temps is given across the top. The black circles/triangles/crosses show the measured increase in land surface area for 1, 2 and 3-sigma events, and the coloured versions the modelled projections. We’re already seeing an increase in 3-sigma events, and it won’t be long before 5-sigma events start to show up.

So far, so technical. What does this mean in practical, policy-relevant terms?

Heatwaves are going to happen more often, they are going to become more widespread, and they are going to become more intense — unimaginably hot, for those of us who grew up in the 1950s to 1980s, the reference period for this work.

We’re already seeing this happen, and we can expect it to get much worse over the next few decades. In other words, this is not a hypothetical problem for another generation to deal with.

It is going to be very important to plan at a personal, local, regional and national level to be able to cope with extreme heat when it happens. This will be particularly important in parts of the world where extreme summer heat is not currently common. Increasing heat will worsen droughts and increase pressures on water supplies.

Developing resilience to heat extremes is something that needs to be done now, because what happens over the next 30 years is already baked in ((Ahem. Sorry.)). The climate commitment is going to bite hard, before we can do anything to prevent things getting even worse.

There is good news, however. If the global community manages to cut emissions steeply, we can avoid the worst impacts. The low-emissions scenario modelled by Coumou and Robinson (RCP 2.6) effectively means transitioning to zero emissions by 2070.

These are the classic dimensions of planning to deal with climate change: we have to cut emissions (mitigate) and prepare for the worst (adapt). One without the other is policy madness. We can also see that the longer we delay both approaches, the costlier it will be to deal with the results.

Excellent article Gareth, and an excellent paper by Coumou and Robinson. This reminds me of what Professor Sir Robert Watson alluded to in his Union Frontiers of Geophysics Lecture at the AGU meeting in San Francisco in December 2012: http://www.youtube.com/watch?v=Yaf0DGVAJAg#t=09m24s

No matter what we do today, the warming trend will most likely not respond any differently over the coming two decades. It will probably continue at approximately 0.2 °C per decade. It is in the decades after that when we will statistically start to see the difference between two paths. On one, today's 400 ppm of CO2 is frozen in the atmosphere. On the more likely one, we keep pouring about 10 Gt of carbon into the atmosphere every year. Of that, roughly half stays in the atmosphere, about a quarter is dissolved in the ocean and about a quarter is taken up by land plants.
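The back-of-envelope carbon budget above can be made explicit. This short Python sketch converts annual emissions into an approximate rise in atmospheric CO2. It relies on three assumptions, all for illustration rather than precise figures:

* the commenter's 10 GtC per year of emissions;
* an airborne fraction of about one half;
* the commonly used conversion of roughly 2.12 GtC per ppm of CO2.

The function name `ppm_rise_per_year` is hypothetical.

```python
GTC_PER_PPM = 2.12  # approximate GtC per 1 ppm of atmospheric CO2 (assumption)

def ppm_rise_per_year(emissions_gtc: float, airborne_fraction: float) -> float:
    """Approximate annual increase in atmospheric CO2 (ppm), given annual
    emissions in GtC and the fraction of them that remains airborne."""
    if not 0.0 <= airborne_fraction <= 1.0:
        raise ValueError("airborne_fraction must be between 0 and 1")
    return emissions_gtc * airborne_fraction / GTC_PER_PPM

# Illustrative partition of the emitted carbon (assumed shares, not measured data).
partition = {"atmosphere": 0.5, "ocean": 0.25, "land": 0.25}
# The atmosphere and the two sinks together must account for all emitted carbon.
assert abs(sum(partition.values()) - 1.0) < 1e-9

print(f"{ppm_rise_per_year(10.0, partition['atmosphere']):.1f} ppm per year")
```

The partition check is there because any set of sink fractions has to add up to 100% of what is emitted.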

Not only are we certain that things are going tits up on the trajectory we are on at the moment. Even if we were to phase out fossil fuels swiftly, there would still be deniers out there arguing that we are throwing our money out of the window: "hey, see, the temperature is still increasing even though emissions are going down", or "hey, see, the temperature is still increasing even though the CO2 concentration in the atmosphere is constant", therefore "we are not affecting the climate". Climate deniers in general lack the ability to grasp just how sluggish the climate system is, and this will not change whatever happens in the coming decades. There will still be ostriches out there…

Climate deniers are worse than preschoolers when someone tries to take their toys off them.

Statistical analyses of the future climate always seem to come out worse than the climate models. This one, of Arctic ice loss, shows a very fast decline: https://sites.google.com/site/arctischepinguin/home/piomas I think the climate models are too cautious and things are happening much faster than predicted.

A lot of the science is known and certain. The biggest problem is putting a time scale to it.

At some point, it will become politically possible to attempt to limit the damage. That may happen when civilization experiences a large increase in what people used to see as the most extreme heat events, coupled with the appearance of new, extreme 5-sigma events.

At that point people won’t be interested in accepting that there is “no way we can stop it from happening”.

Debates about geoengineering take place regularly, such as this one held recently at Harvard. In them, everyone involved states the same position: it would be madness to deploy some of these geoengineering ideas on their own. One such idea is reflecting enough solar radiation back into space to compensate for the warming effect of the extra GHG in the atmosphere. That could quickly return the planet's temperature to its pre-industrial level, or to whatever temperature was desired. The consensus is that it should not be attempted without also embarking on radical GHG emission reductions. Those cuts would aim to stabilize the composition of Earth's atmosphere or return it to some previous composition.

Suppose the political will to stabilize Earth's atmosphere does come to exist. Suppose too that the damage from the warming up to that point proves unacceptable, which seems increasingly likely. There then seems to be a possibility that there will be things we can do to turn the clock back.

The common critique at this point is that no one has come up with an idea for turning the clock back on ocean acidification. This effectively rules out geoengineering as a substitute for stabilizing the composition of Earth's atmosphere at an acceptable level of GHG. It does not, however, rule out geoengineering altogether.

The original Crutzen paper which legitimized discussion of geoengineering (here) suggested that geoengineering might already be a necessity at the time of writing. The reason was the massive amount of tropospheric aerosols that an increasingly prosperous, formerly developing world would be removing from its emissions of pollutants. Crutzen proposed deliberately emitting stratospheric aerosols to replace the reflective properties of the tropospheric aerosols, which were supposedly soon to decline. In other words, given that things had gone this far, he proposed changing the mix of what is emitted to keep the planet from warming beyond what civilization could stand.

A Harvard magazine article on the recent geoengineering debate held there is here.

San Francisco has declared a ‘state of emergency’ over extreme fires now burning near Yosemite National Park. The fires endanger both the city's water supply and the hydroelectric power stations essential to the metropolis's electricity supply. They are 150 miles from the city, but their effects are so dangerous that the mayor declared the ‘state of emergency’.

What will a 4- or 5-sigma event look like for arid regions like California and the rest of the US Southwest?

http://www.theguardian.com/world/2013/aug/24/san-francisco-state-emergency-wildfire-yosemite

I rather enjoyed the way Al Gore put things a few days back:

I’m sure you can pick the bit that doubled me over.

Transcript and video here